NeuLens: Spatial-based Dynamic Acceleration of Convolutional Neural Networks on Edge

System Overview

Abstract

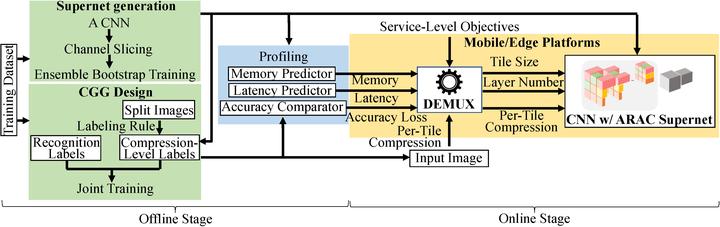

Convolutional neural networks (CNNs) play an important role in mobile and edge computing systems for vision-based tasks such as object classification and detection. However, state-of-the-art CNN acceleration methods either deliver limited practical latency speed-up on general computing platforms or achieve speed-up at the cost of severe accuracy loss. In this paper, we propose NeuLens, a spatial-based dynamic CNN acceleration framework for mobile and edge platforms. Specifically, we design a novel dynamic inference mechanism, the assemble region-aware convolution (ARAC) supernet, that peels off as many redundant operations inside CNN models as possible based on spatial redundancy and channel slicing. In the ARAC supernet, the CNN inference flow is split into multiple independent micro-flows, and the computational cost of each micro-flow can be autonomously adjusted based on its tiled-input content and the application requirements. These micro-flows can be loaded into hardware such as GPUs as single models. Consequently, the reduction in operations translates well into latency speed-up and is compatible with hardware-level accelerations such as cuDNN. Moreover, inference accuracy is well preserved by identifying critical regions in images and processing them at the original resolution with a large micro-flow. Based on our evaluation, NeuLens outperforms baseline methods with a 47.9% latency reduction at the same accuracy and a 67.9% accuracy improvement under the same latency/memory constraints.
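To make the micro-flow idea concrete, the following is a minimal sketch of per-tile cost selection. It is not the ARAC supernet itself: the abstract does not say how tile content is scored, so the pixel-variance criterion, the thresholds, the width ratios, and all function names (`split_into_tiles`, `choose_width`, `plan_micro_flows`) are assumptions made purely for illustration.

```python
from statistics import pvariance


def split_into_tiles(image, tile):
    """Split a 2-D list (H x W) into non-overlapping tile x tile blocks."""
    height, width = len(image), len(image[0])
    tiles = []
    for top in range(0, height, tile):
        for left in range(0, width, tile):
            block = [row[left:left + tile] for row in image[top:top + tile]]
            tiles.append(((top, left), block))
    return tiles


def choose_width(block, thresholds=(10.0, 100.0), widths=(0.25, 0.5, 1.0)):
    """Pick a channel-width ratio for one tile.

    Hypothetical criterion: low pixel variance is treated as spatially
    redundant content, so the tile gets a narrower (cheaper) micro-flow.
    """
    pixels = [p for row in block for p in row]
    score = pvariance(pixels)
    for threshold, width in zip(thresholds, widths):
        if score < threshold:
            return width
    return widths[-1]  # high-variance tile: use the largest micro-flow


def plan_micro_flows(image, tile):
    """Map each tile's (top, left) position to its chosen width ratio."""
    return {pos: choose_width(block) for pos, block in split_into_tiles(image, tile)}


# 4x4 toy image: flat left half, high-contrast right half.
image = [
    [0, 0, 0, 255],
    [0, 0, 255, 0],
    [0, 0, 0, 255],
    [0, 0, 255, 0],
]
plan = plan_micro_flows(image, tile=2)
```

In this toy input, the two flat tiles on the left are assigned the smallest width ratio and the two high-contrast tiles on the right the largest, mirroring the idea that critical regions are processed by a large micro-flow while redundant regions are processed cheaply.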